This report supports something I’ve been talking about for a while:

Major AI chatbots like ChatGPT struggle to distinguish between belief and fact, fueling concerns about their propensity to spread misinformation, per a dystopian paper in the journal Nature Machine Intelligence.

“Most models lack a robust understanding of the factive nature of knowledge — that knowledge inherently requires truth,” read the study, which was conducted by researchers at Stanford University.

They found this has worrying ramifications given the tech’s increased omnipresence in sectors from law to medicine, where the ability to differentiate “fact from fiction, becomes imperative,” per the paper.

“Failure to make such distinctions can mislead diagnoses, distort judicial judgments and amplify misinformation,” the researchers noted.

From a philosophical perspective, I have been extremely skeptical about A.I. from the very beginning. To me, the whole thing rests on a shaky premise: that what’s been written (and collated) online can form the basis for informed decisionmaking. And the stupid rush by corporations to adopt anything and everything A.I. (e.g. to lower salary costs by replacing humans with A.I.) threatens to undermine both our economic and social structures.

I have no real problem with A.I. being used for fluffy activities (press releases and “academic” literary studies being examples, and more fool the users thereof), but I view with extreme concern the use of said “intelligence” in real-life applications, particularly when the outcomes can be exceedingly harmful (and the examples of law and medicine quoted above are but two areas of great concern). Everyone should be worried about this, but it seems that few are, because A.I. is being seen as the Next Big Thing, just as the Internet was during the 1990s.

Anyone remember how that turned out?

Which leads me to the next concern: the huge growth of investment in A.I. looks exactly like the dotcom bubble of the 1990s. Then, nobody seemed to care about such mundane issues as “return on investment” because all the Smart Money seemed to think that there was profit in them thar hills somewhere; we just didn’t know where.

Sound familiar in the A.I. context?

Here’s where things get interesting. In the mid-to-late 1990s, I was managing my own IRA, and my ROI was astounding: from memory, it was something like 35% per annum for about six or seven years (admittedly, off an extremely small startup base; we’re talking single-figure thousands here). But towards the end of the 1990s, I started to feel a sense of unease about the whole thing, and in mid-1999, I pulled out of every tech stock and went to cash.

The bubble popped in early 2000. When I analyzed the potential effect on my stock portfolio, I found that I would have lost almost everything I’d invested in tech stocks, and would have been kept afloat only by a few investments in retail companies: small regional banks and pharmacy chains. I was saved only by that feeling of unease, that nagging feeling that the dotcom thing was getting too good to be true.

Even though I have no investment in A.I. today — for the most obvious of reasons, i.e. poverty — and I’m looking at the thing as a spectator rather than as a participant, I’m starting to get that same feeling in my gut as I did in 1999.

And I’m not the only one.

Michael Burry, who famously shorted the US housing market before its collapse in 2008, has bet over $1 billion that the share prices of AI chipmaker Nvidia and software company Palantir will fall — making a similar play, in other words, on the prediction that the AI industry will collapse.

According to the Securities and Exchange Commission filings, his fund, Scion Asset Management, bought $187.6 million in puts on Nvidia and $912 million in puts on Palantir.

Burry similarly made a long-term $1 billion bet from 2005 onwards against the US mortgage market, anticipating its collapse. His fund rose a whopping 489 percent when the market did subsequently fall apart in 2008.

It’s a major vote of no confidence in the AI industry, highlighting growing concerns that the sector is growing into an enormous bubble that could take the US economy with it if it were to lead to a crash.

In the late 2000s, by the way, anyone with a brain could see that the housing bubble, based on indiscriminate loans to unqualified buyers, was doomed to end badly; yet people continued to think that the growth in the housing market was both infinite and sound (in today’s parlance, that overused word “sustainable”). Of course it wasn’t, and guys like Burry made, as noted above, billions upon its collapse.

I see no essential difference between the dotcom, real estate and A.I. bubbles.

The one difference between the first two and the third, however, is the gigantic upfront financial investment that A.I. requires in electrical supply in order for the thing to work properly, or even at all. That capacity just isn’t there, hence the scramble by companies like Microsoft to create it by, for example, investing in nuclear power generation facilities (at no small cost) in order to feed A.I.’s seemingly insatiable demand for processing power.

This is not going to end well.

But from my perspective, that’s not a bad thing because at the heart of the matter, I think that A.I. is a bridge too far in the human condition — and believe me, despite all my grumblings about the unseemly growth of technology in running our day-to-day lives, I’m no Luddite.

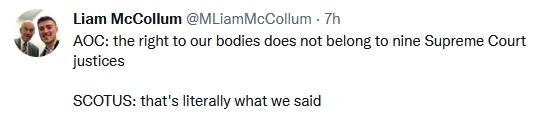

I just try to keep a healthy distinction between fact and fantasy.