I have no idea where this came from, but it’s been here in my head since I woke up.

Dropped New Wife off at the airport for her family reunion in Cape Town.

If anyone wants to know where I am, I’ll be in bed, under the covers, whimpering.

And I’m not coming out…

When talking about my recent acquisition, I referred to the S&W .38 revolver as a Model 60 — my mistake, as Reader Ken had originally told me that it was a Model 10 M&P (Military & Police, for those not familiar with S&W’s various nomenclatures).

Of course, I’m an idiot and can only plead Old Fart’s Disease plus (if I may make just a feeble excuse) my complete confusion with Smith & Wesson’s cuneiform style of model numbering. (And I mean “cuneiform” in terms of its incomprehensibility and not how they write it, although it wouldn’t surprise me if their next revolver model is termed ![]() a.k.a. M&P, I mean why the hell not?)

Anyway… I seem to have wandered somewhat off the track — yet another symptom of OFD — so let me wind this up by making a fulsome apology.

And yes, I’ve gone full Winston Smith and corrected the earlier post, not because I’m like the New York fucking Times, but because for some reason people sometimes use me as a reference, and I wouldn’t want the mistake to cause confusion.

All that apology stuff aside, I should point out that this lovely gun shoots a lot better than I can shoot it: at 25 ft distance, 2″ groups rested and palm-sized groups offhand. Interestingly, I’m more accurate shooting .38+P than regular .38 Special ammo.

It has replaced the little Model 637 snubbie as an alternative carry piece to the 1911 if my clothing requires a smaller profile.

The only question remaining to be asked is: Why the hell did it take me so long to get one of these fine guns?

[exit, kicking myself]

Your suggestions in Comments.

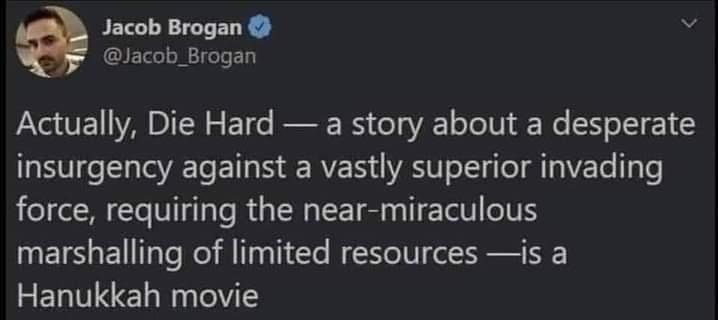

This one’s just for my Tribe Readers:

L’chaim, boychiks.

For the longest time, I would have been one of the loudest voices opposing the idea that we Murkins should copy anything much from the Scandi countries — okay, maybe some of their darkest noir crime TV shows, but not much else.

However, I think that when it comes to immigration policy, there’s much to be learned from the Danes. Watch the video to see how they fixed their erstwhile ruinous position on immigration.

What interests me the most is that highly-restrictive immigration controls, so often a feature of conservative (what they call right-wing) parties, have become very much a part of the Social Democrat (what we would call left-wing) party policy.

You see, the Danes are, if nothing else, highly pragmatic in their pursuit of what they consider the ideal society. And yes, while a strong welfare state is the sine qua non of Danish society, they also understand that without social cohesion, a welfare state is not a tenable system. Those two pillars, the welfare system and social cohesion, form the foundation of their society, and what the Danes realized, long before any of their European neighbors did, was that untrammeled immigration of Third Worlders of the Arab Muslim and African persuasion was rending their social cohesion asunder, and undermining their cherished welfare state.

You have to hand it to them for swinging their immigration system by 180 degrees: in fact, it’s harder to immigrate into Denmark than it is into the United States because the Danish requirements for residency are unbelievably restrictive, including such concepts as civic indoctrination and the linking of conformity to any kind of welfare. If you don’t fit in, the Danes will force you to fuck off back to your shithole country of origin, with neither remorse nor pity on their part.

And naturalized Danish citizenship is almost impossible to come by without lengthy permanent residency and complete assimilation via a rigorous civics examination process. (Fail that test, and you’re on the next plane back to Shitholistan.)

I would really, really like to see that happen Over Here.

I’ll leave it to y’all to decide, though, how likely it is that the foul Democrat-Socialist Party of today would perform a similar change in their position on immigration. And quit laughing.

We need more attitude like this:

“If you don’t share our values, contribute to our economy, and assimilate into our society, then we don’t want you in our country.”

No, that wasn’t the Danish PM. That was President Donald J. Trump, December 2025.